Author: Robin Moffatt

We ❤️ syslogs: Real-time syslog Processing with Apache Kafka and KSQL – Part 2: Event-Driven Alerting with Slack

In the previous article, we saw how syslog data can be easily streamed into Apache Kafka® and filtered in real time with KSQL. In this article, we’re going to see how […]

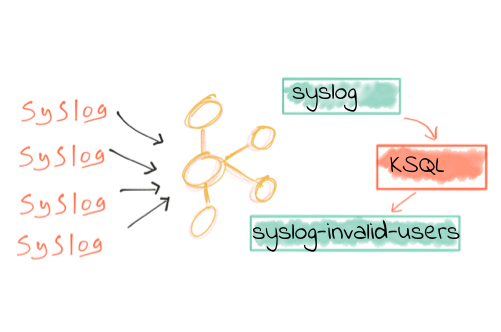

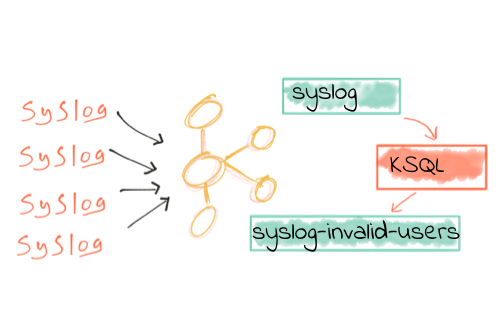

We ❤️ syslogs: Real-time syslog Processing with Apache Kafka and KSQL – Part 1: Filtering

Syslog is one of those ubiquitous standards on which much of modern computing runs. Built into operating systems such as Linux, it’s also commonplace in networking and IoT devices like […]

KSQL in Action: Enriching CSV Events with Data from RDBMS into AWS

Life would be simple if data lived in one place: one single solitary database to rule them all. Anything that needed to be joined to anything could be with a […]

No More Silos: How to Integrate Your Databases with Apache Kafka and CDC

One of the most frequent questions and topics that I see come up on community resources such as StackOverflow, the Confluent Platform mailing list, and the Confluent Community Slack group, […]

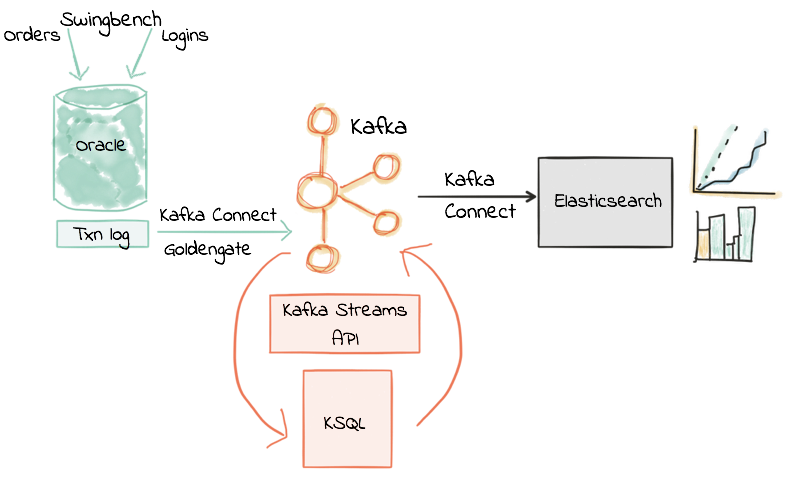

KSQL in Action: Real-Time Streaming ETL from Oracle Transactional Data

In this post I’m going to show what streaming ETL looks like in practice. We’re replacing batch extracts with event streams, and batch transformation with in-flight transformation. But first, a […]

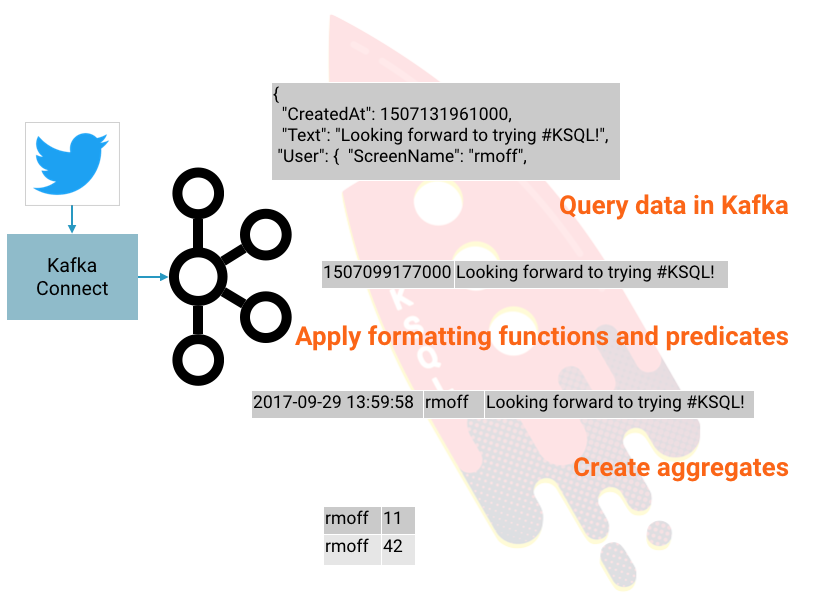

Getting Started Analyzing Twitter Data in Apache Kafka through KSQL

KSQL is the streaming SQL engine for Apache Kafka®. It lets you do sophisticated stream processing on Kafka topics, easily, using a simple and interactive SQL interface. In this short […]

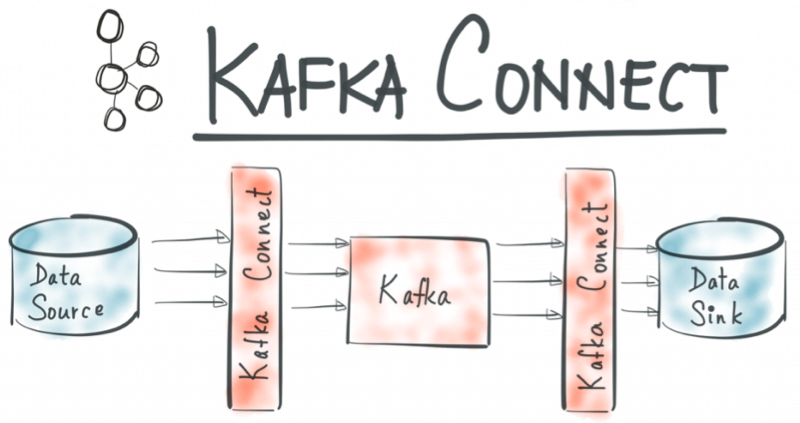

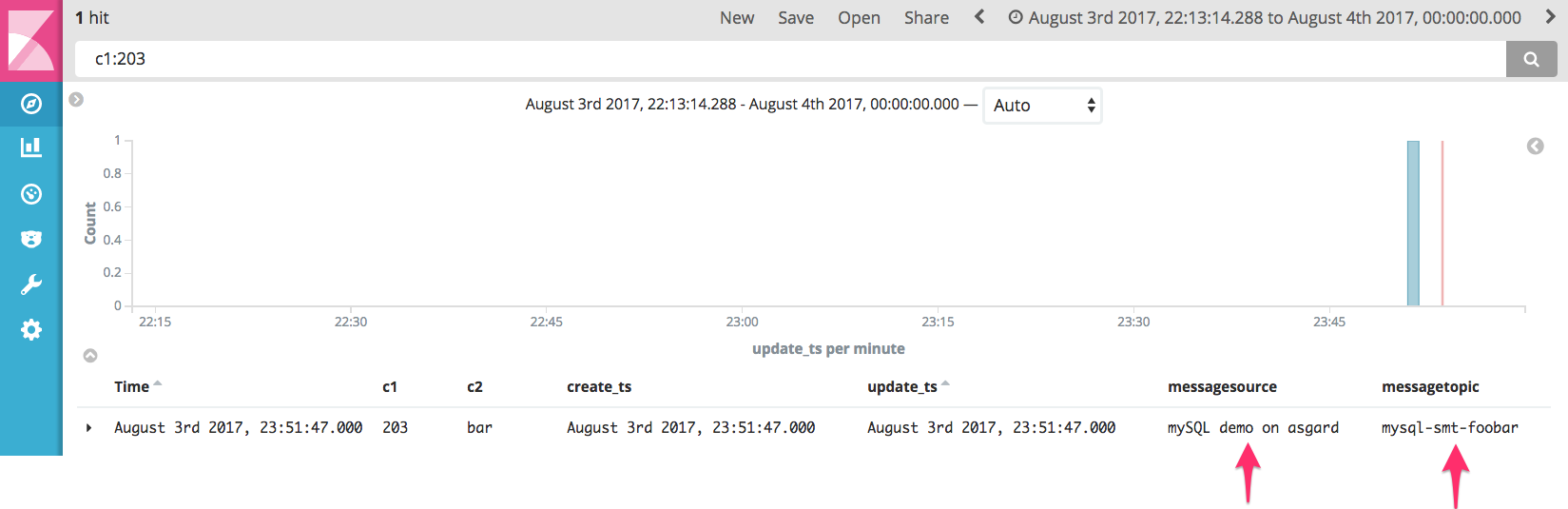

The Simplest Useful Kafka Connect Data Pipeline in the World…or Thereabouts – Part 3

We saw in the earlier articles (part 1, part 2) in this series how to use the Kafka Connect API to build out a very simple, but powerful and scalable, streaming […]

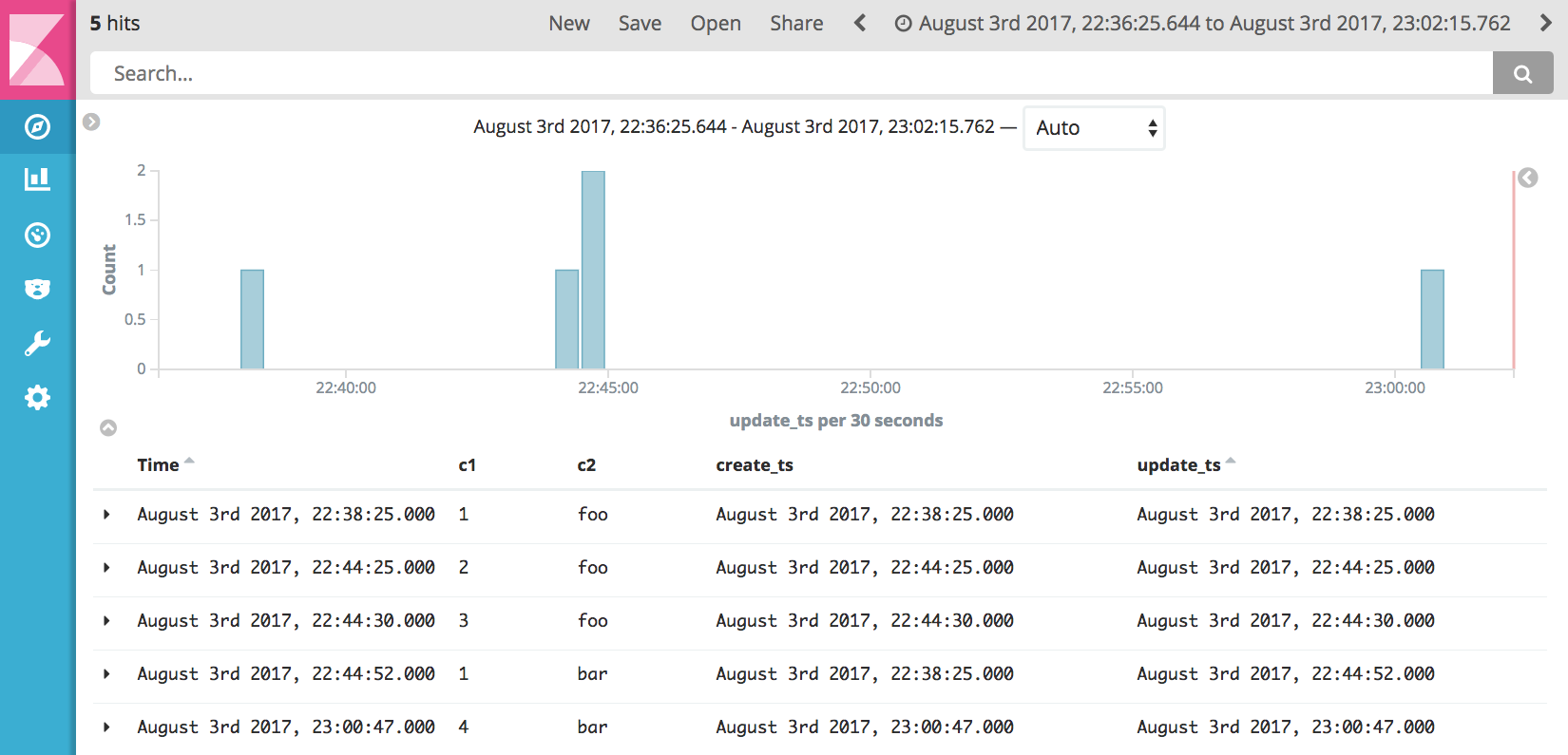

The Simplest Useful Kafka Connect Data Pipeline in the World…or Thereabouts – Part 2

In the previous article in this blog series I showed how easy it is to stream data out of a database into Apache Kafka®, using the Kafka Connect API. I […]

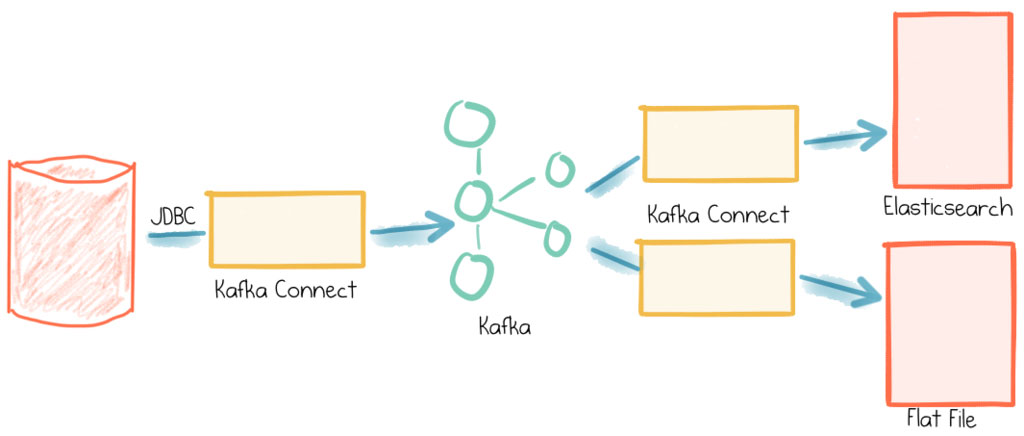

The Simplest Useful Kafka Connect Data Pipeline in the World…or Thereabouts – Part 1

This short series of articles is going to show you how to stream data from a database (MySQL) into Apache Kafka® and from Kafka into both a text file and Elasticsearch—all […]

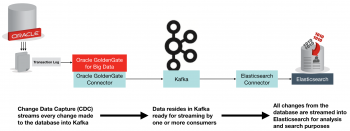

Streaming Data from Oracle using Oracle GoldenGate and the Connect API in Kafka

Note: As of February 2021, Confluent has launched its own Oracle CDC connector. Read this blog post for the latest information.