Why I Can’t Wait for Kafka Summit San Francisco

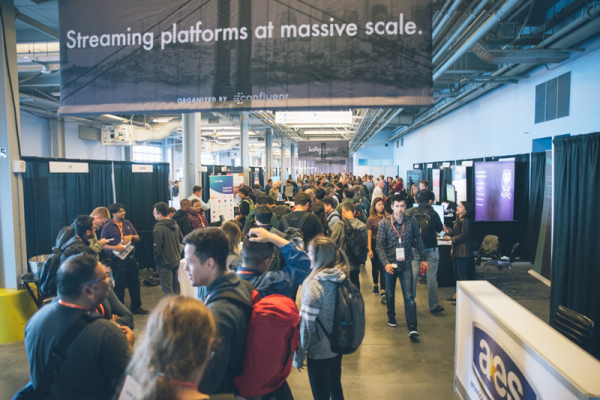

The Kafka Summit Program Committee recently published the schedule for the San Francisco event, and there’s quite a bit to look forward to.

For starters, it is a two-day event, which means we get to attend 14 talks, miss out on 42 talks (that we’ll later watch on video), and spend two days hanging out with our favorite community friends.

While the keynotes have not been announced yet (they will be soon!), there are quite a few exciting talks that are not to be missed. Of course, this is entirely personal. Depending on your role, your interests, and the different ways you use Apache Kafka®, you'll find different talks exciting, which is exactly why there are four tracks.

Interests evolve over time too. I remember that two to three years back, I spent all my time listening to talks about various ETL architectures in the Pipelines track. Last year, I attended mostly sessions about event-driven microservices, and this year, I'm especially interested in talks about running Kafka at scale and about Kafka internals. Good thing there are many of those!

Here is just a sample of the talks I’m especially looking forward to:

- Kafka Cluster Federation at Uber: From the abstract, it sounds like Uber is scaling their Kafka deployment by running many Kafka clusters and somehow making them look like a single cluster to the client. I can't wait to hear why they decided to go in that direction, what the benefits are, and how they are hiding these details from the clients.

- Tackling Kafka, with a Small Team: Since I’m familiar with the work that Jaren Glover and his team are doing, I know the answer is: “with lots and lots of automation.” It’s always fascinating to hear how top-notch teams are running Kafka in production.

- What's the Time?…and Why? Even though I don't work much with Kafka Streams these days, I really can't miss a talk with such a delicious title. It sounds like a great mix of theory and practice that is bound to be enjoyable and educational.

- Kafka Needs No Keeper: If there is one talk you really shouldn't miss, this is the one. Two prolific Kafka committers share their ideas for removing Kafka's dependency on ZooKeeper and outline a plan for how the community can collaborate on this massive and exciting project.

- Achieving a 50% Reduction in Cross-AZ Network Costs from Kafka: This may seem like a niche discussion but…just imagine coming back to the office and presenting to your director detailed plans to cut network costs by half. The conference just paid for itself and more!

I could have gone on and on—there are so many good options, but lists can be overwhelming. I’ll be speaking at the event too and would love to see you there. You can register for Kafka Summit San Francisco using the code Gwen30 to get 30% off and take a look at the full agenda. Don’t forget to share your picks in the comments and on social media using #kafkasummit.
