Tags: Real-Time Data

From the Cellar to the Cloud – How Aedifion is Driving Next-Generation Building Automation with Confluent

It is no exaggeration to say that a lot is going wrong in commercial buildings today. The building and construction sector consumes 36% of global final energy and accounts for almost 40% […]

Building a Real-Time Data Pipeline with Oracle CDC and MarkLogic Using CFK and Confluent Cloud

Today, enterprise technology is entering a watershed moment: businesses are moving to end-to-end automation, which requires integrating data from different sources and destinations in real time. Every industry from Internet […]

Building Real-Time Data Systems the Hard Way

A few years ago I helped build an event-driven system for gym bookings. The pitch was that we were building a better experience for both the gym members booking different […]

8 Years of Event Streaming with Apache Kafka

Since I first started using Apache Kafka® eight years ago, I have gone from being a student who had just heard about event streaming to contributing to the transformational, company-wide event […]

Powering Microservices at SEI Investments with Event Streaming

We launched a transformation initiative three years ago that transitioned SEI Investments from a monolithic database-oriented architecture to a containerized services platform with an event-driven architecture based on Confluent Platform. […]

Providing Timely, Reliable, and Consistent Travel Information to Millions of Deutsche Bahn Passengers with Apache Kafka and Confluent Platform

Every day, about 5.7 million rail passengers rely on Deutsche Bahn (DB) to get to their destination. Virtually every one of these passengers needs access to vital trip information, including […]

Building a Real-Time, Event-Driven Stock Platform at Euronext

As the head of global customer marketing at Confluent, I tell people I have the best job. As we provide a complete event streaming platform that is radically changing how […]

The Top Sessions from This Year’s Kafka Summit Are…

This past April, Confluent hosted the inaugural Kafka Summit in San Francisco. The event brought together the entire Kafka community to share use cases and learnings and to participate in the hackathon. The […]

Introducing Kafka Streams: Stream Processing Made Simple

I’m really excited to announce a major new feature in Apache Kafka v0.10: Kafka’s Streams API. The Streams API, available as a Java library that is part of the official […]

Announcing Kafka Connect: Building large-scale low-latency data pipelines

For a long time, a substantial portion of data processing that companies did ran as big batch jobs — CSV files dumped out of databases, log files collected at the […]

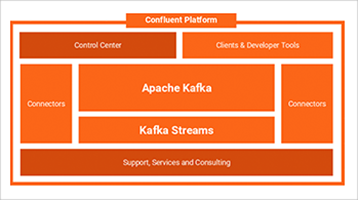

Confluent Platform 2.0 is GA!

We are very excited to announce the general availability of Confluent Platform 2.0. For organizations that want to build a streaming data pipeline around Apache Kafka, Confluent Platform is the […]

Apache Kafka Hits 1.1 Trillion Messages Per Day – Joins the 4 Comma Club

I am very excited that LinkedIn’s deployment of Apache Kafka has surpassed 1.1 trillion (yes, trillion with a “t”, and 4 commas) messages per day. This is the largest deployment of Apache […]

Compression in Apache Kafka is now 34% faster

Apache Kafka is widely used to enable a number of data-intensive operations, from collecting log data for analysis to acting as a storage layer for large-scale real-time stream […]

Making Apache Kafka Elastic With Apache Mesos

This post has been written in collaboration with Derrick Harris from Mesosphere and Joe Stein, a Kafka committer. For an updated version of this article, please see Apache Mesos, Apache Kafka and […]